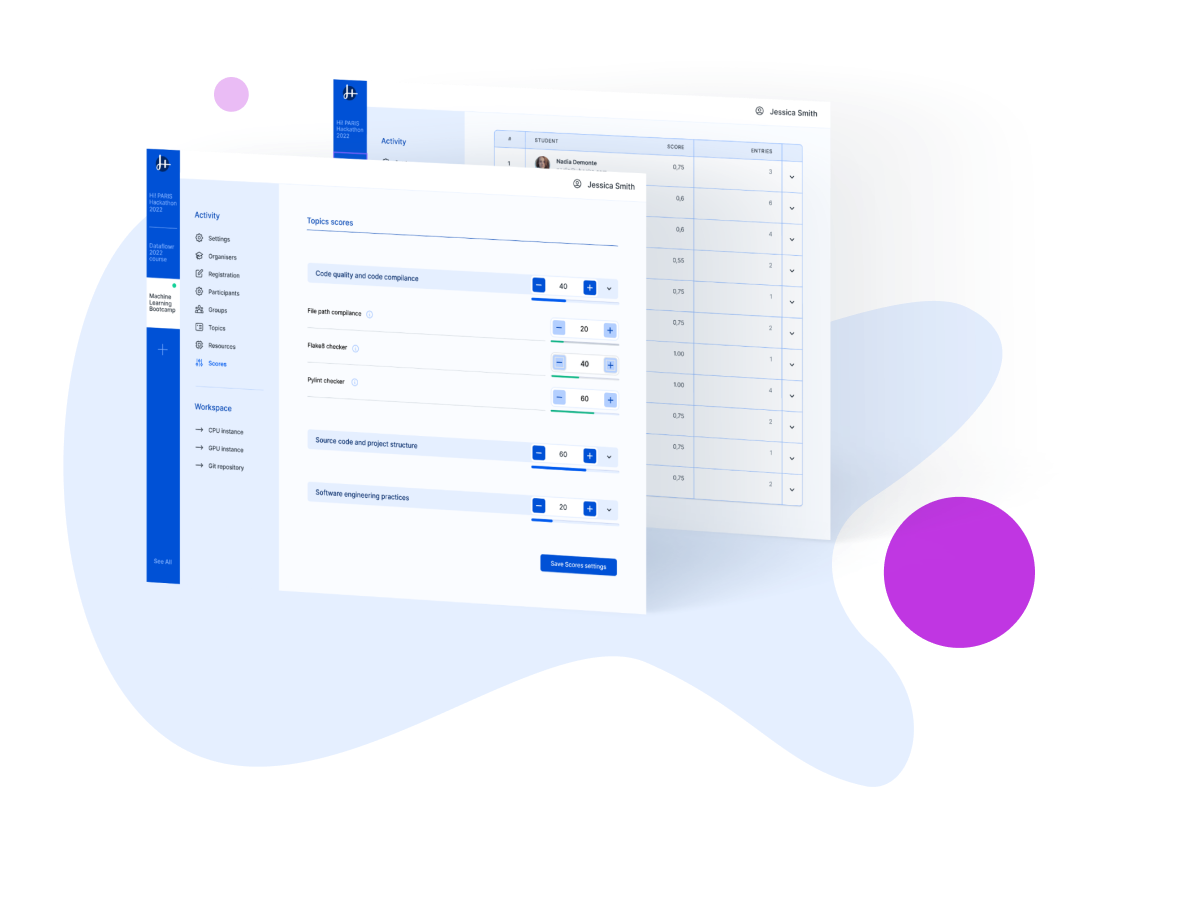

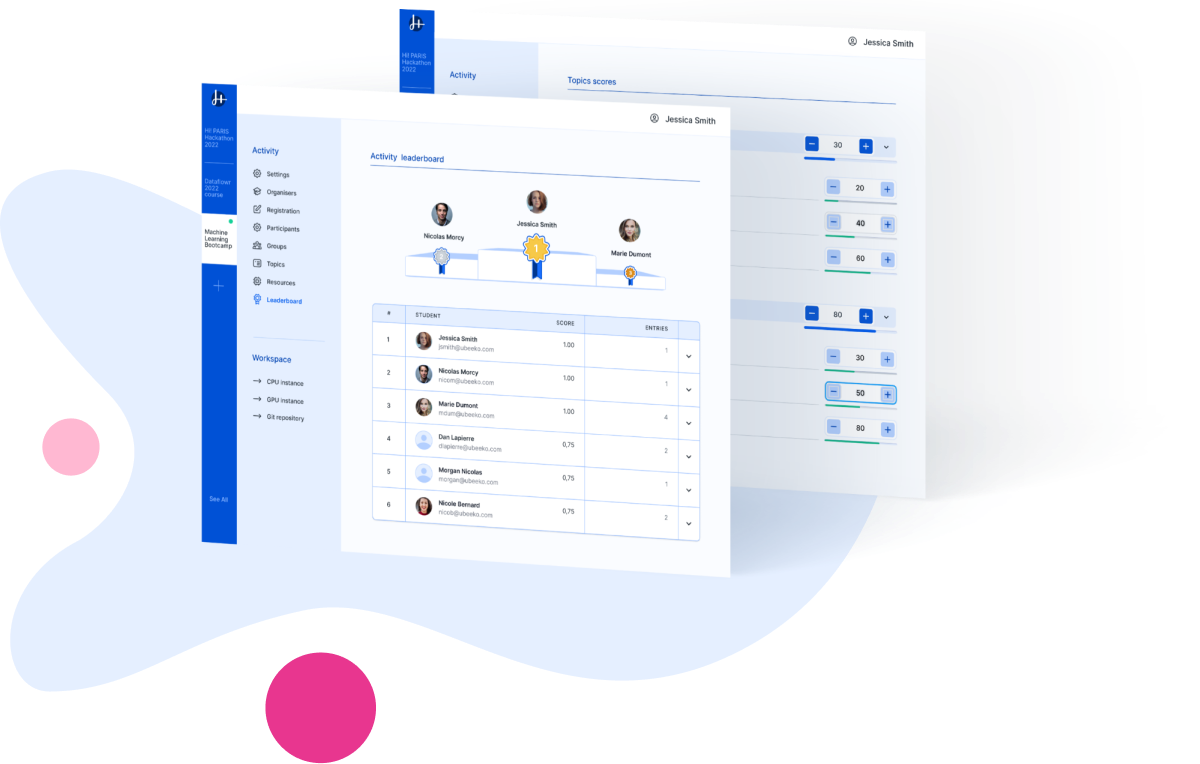

Simple or in-depth model scoring

You get to choose! Rank the participants by how well their submitted data predicts outcomes, using our preloaded evaluation metrics. Or start from the models the participants deliver, optionally apply custom metrics, and extend your analysis to model compute efficiency or interpretability.
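As an illustration of the simple scoring path, here is a minimal sketch. It assumes each participant submits a list of numeric predictions aligned with the true outcomes. The names `rmse`, `accuracy_within` and `rank_participants` are hypothetical and are not the platform's API. RMSE stands in for a preloaded metric, and `accuracy_within` stands in for a custom metric you might plug in.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# A metric maps (true outcomes, predicted outcomes) to a single score.
Metric = Callable[[Sequence[float], Sequence[float]], float]


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root mean squared error (stand-in for a preloaded metric): lower is better."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    n = len(y_true)
    return (sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n) ** 0.5


def accuracy_within(tol: float) -> Metric:
    """Hypothetical custom metric: share of predictions within `tol` of the truth (higher is better)."""
    def metric(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
        hits = sum(abs(t - p) <= tol for t, p in zip(y_true, y_pred))
        return hits / len(y_true)
    return metric


def rank_participants(
    y_true: Sequence[float],
    submissions: Dict[str, Sequence[float]],
    metric: Metric,
    higher_is_better: bool = False,
) -> List[Tuple[str, float]]:
    """Score each participant's predictions and return (name, score) pairs, best first."""
    scores = [(name, metric(y_true, preds)) for name, preds in submissions.items()]
    return sorted(scores, key=lambda item: item[1], reverse=higher_is_better)


if __name__ == "__main__":
    y_true = [3.0, 5.0, 2.0, 8.0]
    submissions = {
        "alice": [2.5, 5.0, 2.0, 7.5],
        "bob": [3.0, 4.0, 3.0, 9.0],
        "carol": [1.0, 6.0, 2.0, 8.0],
    }
    # Ranking with the "preloaded" metric (lower RMSE ranks first).
    print(rank_participants(y_true, submissions, rmse))
    # Ranking with a custom metric (higher share of close predictions ranks first).
    print(rank_participants(y_true, submissions, accuracy_within(0.5), higher_is_better=True))
```

With this toy data, the RMSE ranking is alice, bob, carol. The custom metric reorders the last two (alice, carol, bob), which shows how the choice of metric can change a leaderboard.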